A lot of customers want to set up automation for installing common packages and applying configuration to vanilla images. One way to provide that automation is to use configdrive, which allows you to execute commands after the server is created, as well as install any packages that are required.

The good thing about this approach is that you can get a server up and running with a single command, plus of course your configuration file (which contains all the automation). Here are the steps you need, and it is actually very simple!

Step 1: Create the automation file (cloud-config)

#cloud-config

packages:

- apache2

- php5

- php5-mysql

- mysql-server

runcmd:

- wget http://wordpress.org/latest.tar.gz -P /tmp/

- tar -zxf /tmp/latest.tar.gz -C /var/www/

- mysql -e "create database wordpress; create user 'wpuser'@'localhost' identified by 'changemetoo'; grant all privileges on wordpress . \* to 'wpuser'@'localhost'; flush privileges;"

- mysql -e "drop database test; drop user 'test'@'localhost'; flush privileges;"

- mysqladmin -u root password 'changeme'

This installs apache2, php5, php5-mysql and mysql-server, downloads WordPress to /tmp and then extracts it into /var/www. It then creates the wordpress database and the wpuser database user, drops the test database and test user, and sets the MySQL root password.
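
cloud-init only treats user data as cloud-config when the first line is exactly #cloud-config, so a missing or mistyped header is an easy way to end up with a server where nothing ran. A minimal sketch of a pre-flight check, assuming a POSIX shell; has_cloud_config_header is a hypothetical helper name, not part of supernova or cloud-init:

```shell
#!/bin/sh
# has_cloud_config_header FILE
# Succeed if the first line of FILE is exactly "#cloud-config".
# A trailing carriage return (Windows line endings) is ignored.
# (Hypothetical helper name, used here for illustration only.)
has_cloud_config_header() {
    [ "$(head -n 1 "$1" | tr -d '\r')" = "#cloud-config" ]
}

# Example (assumes the file from Step 1 is saved as cloud-config):
# has_cloud_config_header cloud-config && echo "header looks fine"
```

This only checks the header line; it does not validate the YAML that follows.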

Step 2: Create server using cloud-config in Supernova via the Rackspace API

(It's not hard, it's easy!)

supernova customer boot --config-drive=true --flavor performance1-1 --image 09de0a66-3156-48b4-90a5-1cf25a905207 --user-data cloud-config testing-configdrive

+--------------------------------------+-------------------------------------------------------------------------------+

| Property | Value |

+--------------------------------------+-------------------------------------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| RAX-PUBLIC-IP-ZONE-ID:publicIPZoneId | |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | SECUREPASSWORDHERE |

| config_drive | True |

| created | 2015-10-20T11:10:23Z |

| flavor | 1 GB Performance (performance1-1) |

| hostId | |

| id | ef084d0f-70cc-4366-b348-daf987909899 |

| image | Ubuntu 14.04 LTS (Trusty Tahr) (PVHVM) (09de0a66-3156-48b4-90a5-1cf25a905207) |

| key_name | - |

| metadata | {} |

| name | testing-configdrive |

| progress | 0 |

| status | BUILD |

| tenant_id | 10000000 |

| updated | 2015-10-20T11:10:24Z |

| user_id | 05b18e859cad42bb9a5a35ad0a6fba2f |

+--------------------------------------+-------------------------------------------------------------------------------+

In my case supernova was already set up. I have another article on this site covering how to install and set up supernova, so take a look there if you need it. My supernova configuration looks like this (with the API key removed, of course!):

[customer]

OS_AUTH_URL=https://identity.api.rackspacecloud.com/v2.0/

OS_AUTH_SYSTEM=rackspace

#OS_COMPUTE_API_VERSION=1.1

NOVA_RAX_AUTH=1

OS_REGION_NAME=LON

NOVA_SERVICE_NAME=cloudServersOpenStack

OS_PASSWORD=90bb3pd0a7MYMOCKAPIKEYc419572678abba136a2

OS_USERNAME=mycloudusername

OS_TENANT_NAME=100000

OS_TENANT_NAME is your customer number; take it from the URL in mycloud.rackspace.com after logging in. OS_PASSWORD is your API key; get it from the account settings page in mycloud.rackspace.co.uk. OS_USERNAME is the username you use to log in to the Rackspace mycloud control panel. Simples!

Step 3: Confirm your server built as expected

root@testing-configdrive:~# ls /tmp

latest.tar.gz

root@testing-configdrive:~# ls /var/www/wordpress

index.php readme.html wp-admin wp-comments-post.php wp-content wp-includes wp-load.php wp-mail.php wp-signup.php xmlrpc.php

license.txt wp-activate.php wp-blog-header.php wp-config-sample.php wp-cron.php wp-links-opml.php wp-login.php wp-settings.php wp-trackback.php
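
The same check can be scripted rather than eyeballed. A small sketch, assuming it is run on the new server; check_wordpress_dir is a hypothetical helper and the three file names are just a sample taken from the listing above, not a full integrity check:

```shell
#!/bin/sh
# check_wordpress_dir DIR
# Succeed only if DIR contains a few of the files seen in the
# ls output above; report the first missing file on stderr.
# (Hypothetical helper name; the file list is only a sample.)
check_wordpress_dir() {
    for f in index.php wp-login.php wp-config-sample.php; do
        [ -f "$1/$f" ] || { echo "missing: $1/$f" >&2; return 1; }
    done
}

# Example:
# check_wordpress_dir /var/www/wordpress && echo "WordPress files present"
```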

In my case everything went fine and WordPress was extracted to /var/www/wordpress. But what if I wanted WordPress served from the default /var/www/html directory instead? That's pretty easy. It's just two extra commands:

mv /var/www/html /var/www/html_old

mv /var/www/wordpress /var/www/html

So let's add that to our automation script:

#cloud-config

packages:

- apache2

- php5

- php5-mysql

- mysql-server

runcmd:

- wget http://wordpress.org/latest.tar.gz -P /tmp/

- tar -zxf /tmp/latest.tar.gz -C /var/www/; mv /var/www/html /var/www/html_old; mv /var/www/wordpress /var/www/html

- mysql -e "create database wordpress; create user 'wpuser'@'localhost' identified by 'changemetoo'; grant all privileges on wordpress . \* to 'wpuser'@'localhost'; flush privileges;"

- mysql -e "drop database test; drop user 'test'@'localhost'; flush privileges;"

- mysqladmin -u root password 'changeme'

Job done. It's just a case of re-running the command now:

supernova customer boot --config-drive=true --flavor performance1-1 --image 09de0a66-3156-48b4-90a5-1cf25a905207 --user-data cloud-config testing-configdrive

And then checking that our WordPress website loads correctly, without any additional configuration or having to log in to the machine! Not bad automation there.

I could have quite easily achieved the same thing by using the API directly: no supernova and no file on the filesystem, just the raw command! Yeah, that'd be better than not bad!

Creating post-build automation through the API via curl

Here’s how to do it.

Step 1: Prepare your script by encoding it as Base64

Unencoded Script:

#cloud-config

packages:

- apache2

- php5

- php5-mysql

- mysql-server

runcmd:

- wget http://wordpress.org/latest.tar.gz -P /tmp/

- tar -zxf /tmp/latest.tar.gz -C /var/www/; mv /var/www/html /var/www/html_old; mv /var/www/wordpress /var/www/html

- mysql -e "create database wordpress; create user 'wpuser'@'localhost' identified by 'changemetoo'; grant all privileges on wordpress . \* to 'wpuser'@'localhost'; flush privileges;"

- mysql -e "drop database test; drop user 'test'@'localhost'; flush privileges;"

- mysqladmin -u root password 'changeme'

Encoded Script:

I2Nsb3VkLWNvbmZpZw0KDQpwYWNrYWdlczoNCg0KIC0gYXBhY2hlMg0KIC0gcGhwNQ0KIC0gcGhwNS1teXNxbA0KIC0gbXlzcWwtc2VydmVyDQoNCnJ1bmNtZDoNCg0KIC0gd2dldCBodHRwOi8vd29yZHByZXNzLm9yZy9sYXRlc3QudGFyLmd6IC1QIC90bXAvDQogLSB0YXIgLXp4ZiAvdG1wL2xhdGVzdC50YXIuZ3ogLUMgL3Zhci93d3cvIDsgbXYgL3Zhci93d3cvaHRtbCAvdmFyL3d3dy9odG1sX29sZDsgbXYgL3Zhci93d3cvd29yZHByZXNzIC92YXIvd3d3L2h0bWwNCiAtIG15c3FsIC1lICJjcmVhdGUgZGF0YWJhc2Ugd29yZHByZXNzOyBjcmVhdGUgdXNlciAnd3B1c2VyJ0AnbG9jYWxob3N0JyBpZGVudGlmaWVkIGJ5ICdjaGFuZ2VtZXRvbyc7IGdyYW50IGFsbCBwcml2aWxlZ2VzIG9uIHdvcmRwcmVzcyAuIFwqIHRvICd3cHVzZXInQCdsb2NhbGhvc3QnOyBmbHVzaCBwcml2aWxlZ2VzOyINCiAtIG15c3FsIC1lICJkcm9wIGRhdGFiYXNlIHRlc3Q7IGRyb3AgdXNlciAndGVzdCdAJ2xvY2FsaG9zdCc7IGZsdXNoIHByaXZpbGVnZXM7Ig0KIC0gbXlzcWxhZG1pbiAtdSByb290IHBhc3N3b3JkICdjaGFuZ2VtZSc=
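
An encoded string like the one above can be produced on the command line. A minimal sketch, assuming the script is saved as cloud-config (the file name used in the supernova example) and that the base64 utility is installed; encode_user_data is a hypothetical helper name:

```shell
#!/bin/sh
# encode_user_data FILE
# Print FILE as a single-line Base64 string suitable for the
# "user_data" field of a server create request.
# (Hypothetical helper name, used here for illustration only.)
encode_user_data() {
    # Some base64 implementations wrap their output at 76 columns,
    # so strip the newlines to keep the value on one line.
    base64 < "$1" | tr -d '\n'
}

# Example (assumes a file named cloud-config in the current directory):
# encode_user_data cloud-config
```

Piping through tr rather than relying on a "no wrap" flag is a deliberate choice here, since that flag differs between base64 implementations.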

Step 2: Get an authorization token from the identity API endpoint

Command:

$ curl -s https://identity.api.rackspacecloud.com/v2.0/tokens -X 'POST' -d '{"auth":{"passwordCredentials":{"username":"adambull", "password":"superBRAIN%!7912105!"}}}' -H "Content-Type: application/json"

Response:

{"access":{"token":{"id":"AAD4gu67KlOPQeRSTJVC_8MLrTomBCxN6HdmVhlI4y9SiOa-h-Ytnlls2dAJo7wa60E9nQ9Se0uHxgJuHayVPEssmIm--MOCKTOKEN_EXAMPLE-0Wv5n0ZY0A","expires":"2015-10-21T15:06:44.577Z"

It's also possible to use your API key instead to retrieve the token ID used by the API:

(if you don’t like using your control panel password!)

curl -s https://identity.api.rackspacecloud.com/v2.0/tokens -X 'POST' \

-d '{"auth":{"RAX-KSKEY:apiKeyCredentials":{"username":"yourUserName", "apiKey":"yourApiKey"}}}' \

-H "Content-Type: application/json" | python -m json.tool
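
To avoid copying the token out of the response by hand, the id can be pulled from the JSON directly. A sketch, assuming python3 is on the PATH and that the response has the access, token, id structure shown in the response above; extract_token is a hypothetical helper name:

```shell
#!/bin/sh
# extract_token
# Read an identity API response on stdin and print the value of
# access.token.id. (Hypothetical helper name; assumes python3.)
extract_token() {
    python3 -c 'import json, sys; print(json.load(sys.stdin)["access"]["token"]["id"])'
}

# Example, using the same placeholder credentials as above:
# token=$(curl -s https://identity.api.rackspacecloud.com/v2.0/tokens -X POST \
#     -d '{"auth":{"RAX-KSKEY:apiKeyCredentials":{"username":"yourUserName", "apiKey":"yourApiKey"}}}' \
#     -H "Content-Type: application/json" | extract_token)
```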

Step 3: Construct a script to execute the command directly through the API

#!/bin/sh

# Your Rackspace account DDI; look for a number like the one below when you log in to the Rackspace mycloud control panel

account='10000000'

# Using the token that was returned to us in step 2

token="AAD4gu6FH-KoLCKiPWpqHONkCqGJ0YiDuO6yvQG4J1jRSjcQoZSqRK94u0jaYv5BMOCKTOKENpMsI3NEkjNqApipi0Lr2MFLjw"

# London datacentre endpoint; could be SYD, IAD, ORD, DFW etc.

curl -v https://lon.servers.api.rackspacecloud.com/v2/$account/servers \

-X POST \

-H "X-Auth-Project-Id: $account" \

-H "Content-Type: application/json" \

-H "Accept: application/json" \

-H "X-Auth-Token: $token" \

-d '{"server": {"name": "testing-cloud-init-api", "imageRef": "09de0a66-3156-48b4-90a5-1cf25a905207", "flavorRef": "general1-1", "config_drive": "true", "user_data": "I2Nsb3VkLWNvbmZpZw0KDQpwYWNrYWdlczoNCg0KIC0gYXBhY2hlMg0KIC0gcGhwNQ0KIC0gcGhwNS1teXNxbA0KIC0gbXlzcWwtc2VydmVyDQoNCnJ1bmNtZDoNCg0KIC0gd2dldCBodHRwOi8vd29yZHByZXNzLm9yZy9sYXRlc3QudGFyLmd6IC1QIC90bXAvDQogLSB0YXIgLXp4ZiAvdG1wL2xhdGVzdC50YXIuZ3ogLUMgL3Zhci93d3cvIDsgbXYgL3Zhci93d3cvaHRtbCAvdmFyL3d3dy9odG1sX29sZDsgbXYgL3Zhci93d3cvd29yZHByZXNzIC92YXIvd3d3L2h0bWwNCiAtIG15c3FsIC1lICJjcmVhdGUgZGF0YWJhc2Ugd29yZHByZXNzOyBjcmVhdGUgdXNlciAnd3B1c2VyJ0AnbG9jYWxob3N0JyBpZGVudGlmaWVkIGJ5ICdjaGFuZ2VtZXRvbyc7IGdyYW50IGFsbCBwcml2aWxlZ2VzIG9uIHdvcmRwcmVzcyAuIFwqIHRvICd3cHVzZXInQCdsb2NhbGhvc3QnOyBmbHVzaCBwcml2aWxlZ2VzOyINCiAtIG15c3FsIC1lICJkcm9wIGRhdGFiYXNlIHRlc3Q7IGRyb3AgdXNlciAndGVzdCdAJ2xvY2FsaG9zdCc7IGZsdXNoIHByaXZpbGVnZXM7Ig0KIC0gbXlzcWxhZG1pbiAtdSByb290IHBhc3N3b3JkICdjaGFuZ2VtZSc="}}' \

| python -m json.tool

Zomg what does this mean?

X-Auth-Token: is just the header that is sent to authorise your request. You got the token using your mycloud username and password, or mycloud username and API key in step 2.

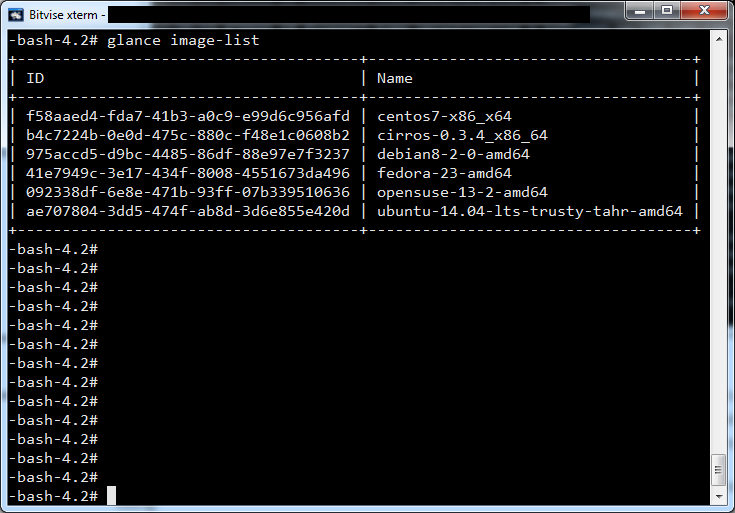

imageRef: this is just the ID assigned to the base image, in this case Ubuntu 14.04 LTS. Take a look below at all the different images you can use (and the image ID of each):

$ supernova customer image-list

| ade87903-9d82-4584-9cc1-204870011de0 | Arch 2015.7 (PVHVM) | ACTIVE | |

| fdaf64c7-d9f3-446c-bd7c-70349305ae91 | CentOS 5 (PV) | ACTIVE | |

| 21612eaf-a350-4047-b06f-6bb8a8a7bd99 | CentOS 6 (PV) | ACTIVE | |

| fabe045f-43f8-4991-9e6c-5cabd617538c | CentOS 6 (PVHVM) | ACTIVE | |

| 6595f1b7-e825-4bd2-addc-c7b1c803a37f | CentOS 7 (PVHVM) | ACTIVE | |

| 2c12f6da-8540-40bc-b974-9a72040173e0 | CoreOS (Alpha) | ACTIVE | |

| 8dc7d5d8-4ad4-41b6-acf1-958dfeadcb17 | CoreOS (Beta) | ACTIVE | |

| 415ca2e6-df92-44e6-ba95-8ee36b436b24 | CoreOS (Stable) | ACTIVE | |

| eaaf94d8-55a6-4bfa-b0a8-473febb012dc | Debian 7 (Wheezy) (PVHVM) | ACTIVE | |

| c3aacaf9-8d1e-4d41-bb47-045fbc392a1c | Debian 8 (Jessie) (PVHVM) | ACTIVE | |

| 081a8b12-515c-41c9-8ce4-13139e1904f7 | Debian Testing (Stretch) (PVHVM) | ACTIVE | |

| 498c59a0-3c26-4357-92c0-dd938baca3db | Debian Unstable (Sid) (PVHVM) | ACTIVE | |

| 46975098-7799-4e72-8ae0-d6ef9d2d26a1 | Fedora 21 (PVHVM) | ACTIVE | |

| 0976b31e-f6d7-4d74-81e9-007fca25067e | Fedora 22 (PVHVM) | ACTIVE | |

| 7a1cf8de-7721-4d56-900b-1e65def2ada5 | FreeBSD 10 (PVHVM) | ACTIVE | |

| 7451d607-426d-416f-8d29-97e57f6f3ad5 | Gentoo 15.3 (PVHVM) | ACTIVE | |

| 79436148-753f-41b7-aee9-5acbde16582c | OpenSUSE 13.2 (PVHVM) | ACTIVE | |

| 05dd965d-84ce-451b-9ca1-83a134e523c3 | Red Hat Enterprise Linux 5 (PV) | ACTIVE | |

| 783f71f4-d2d8-4d38-b2e1-8c916de79a38 | Red Hat Enterprise Linux 6 (PV) | ACTIVE | |

| 5176fde9-e9d6-4611-9069-1eecd55df440 | Red Hat Enterprise Linux 6 (PVHVM) | ACTIVE | |

| 92f8a8b8-6019-4c27-949b-cf9910b84ffb | Red Hat Enterprise Linux 7 (PVHVM) | ACTIVE | |

| 36076d08-3e8b-4436-9253-7a8868e4f4d7 | Scientific Linux 6 (PVHVM) | ACTIVE | |

| 6118e449-3149-475f-bcbb-99d204cedd56 | Scientific Linux 7 (PVHVM) | ACTIVE | |

| 656e65f7-6441-46e8-978d-0d39beaaf559 | Ubuntu 12.04 LTS (Precise Pangolin) (PV) | ACTIVE | |

| 973775ab-0653-4ef8-a571-7a2777787735 | Ubuntu 12.04 LTS (Precise Pangolin) (PVHVM) | ACTIVE | |

| 5ed162cc-b4eb-4371-b24a-a0ae73376c73 | Ubuntu 14.04 LTS (Trusty Tahr) (PV) | ACTIVE | |

| ***09de0a66-3156-48b4-90a5-1cf25a905207*** | Ubuntu 14.04 LTS (Trusty Tahr) (PVHVM) | ACTIVE | |

| 658a7d3b-4c58-4e29-b339-2509cca0de10 | Ubuntu 15.04 (Vivid Vervet) (PVHVM) | ACTIVE | |

| faad95b7-396d-483e-b4ae-77afec7e7097 | Vyatta Network OS 6.7R9 | ACTIVE | |

| ee71e392-12b0-4050-b097-8f75b4071831 | Windows Server 2008 R2 SP1 | ACTIVE | |

| 5707f82f-43f0-41e0-8e51-bfb597852825 | Windows Server 2008 R2 SP1 + SQL Server 2008 R2 SP2 Standard | ACTIVE | |

| b684e5a0-11a8-433e-a4b8-046137783e1b | Windows Server 2008 R2 SP1 + SQL Server 2008 R2 SP2 Web | ACTIVE | |

| d16fd3df-3b24-49ee-ae6a-317f450006e7 | Windows Server 2012 | ACTIVE | |

| f495b41d-07e1-44c5-a3e8-65c4412a7eb8 | Windows Server 2012 + SQL Server 2012 SP1 Standard | ACTIVE | |

flavorRef: this simply refers to the server type (flavor) to start up. It's pretty darn simple:

$ supernova lon flavor-list

+------------------+-------------------------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+------------------+-------------------------+-----------+------+-----------+------+-------+-------------+-----------+

| 2 | 512MB Standard Instance | 512 | 20 | 0 | | 1 | | N/A |

| 3 | 1GB Standard Instance | 1024 | 40 | 0 | | 1 | | N/A |

| 4 | 2GB Standard Instance | 2048 | 80 | 0 | | 2 | | N/A |

| 5 | 4GB Standard Instance | 4096 | 160 | 0 | | 2 | | N/A |

| 6 | 8GB Standard Instance | 8192 | 320 | 0 | | 4 | | N/A |

| 7 | 15GB Standard Instance | 15360 | 620 | 0 | | 6 | | N/A |

| 8 | 30GB Standard Instance | 30720 | 1200 | 0 | | 8 | | N/A |

| compute1-15 | 15 GB Compute v1 | 15360 | 0 | 0 | | 8 | | N/A |

| compute1-30 | 30 GB Compute v1 | 30720 | 0 | 0 | | 16 | | N/A |

| compute1-4 | 3.75 GB Compute v1 | 3840 | 0 | 0 | | 2 | | N/A |

| compute1-60 | 60 GB Compute v1 | 61440 | 0 | 0 | | 32 | | N/A |

| compute1-8 | 7.5 GB Compute v1 | 7680 | 0 | 0 | | 4 | | N/A |

| general1-1 | 1 GB General Purpose v1 | 1024 | 20 | 0 | | 1 | | N/A |

| general1-2 | 2 GB General Purpose v1 | 2048 | 40 | 0 | | 2 | | N/A |

| general1-4 | 4 GB General Purpose v1 | 4096 | 80 | 0 | | 4 | | N/A |

| general1-8 | 8 GB General Purpose v1 | 8192 | 160 | 0 | | 8 | | N/A |

| io1-120 | 120 GB I/O v1 | 122880 | 40 | 1200 | | 32 | | N/A |

| io1-15 | 15 GB I/O v1 | 15360 | 40 | 150 | | 4 | | N/A |

| io1-30 | 30 GB I/O v1 | 30720 | 40 | 300 | | 8 | | N/A |

| io1-60 | 60 GB I/O v1 | 61440 | 40 | 600 | | 16 | | N/A |

| io1-90 | 90 GB I/O v1 | 92160 | 40 | 900 | | 24 | | N/A |

| memory1-120 | 120 GB Memory v1 | 122880 | 0 | 0 | | 16 | | N/A |

| memory1-15 | 15 GB Memory v1 | 15360 | 0 | 0 | | 2 | | N/A |

| memory1-240 | 240 GB Memory v1 | 245760 | 0 | 0 | | 32 | | N/A |

| memory1-30 | 30 GB Memory v1 | 30720 | 0 | 0 | | 4 | | N/A |

| memory1-60 | 60 GB Memory v1 | 61440 | 0 | 0 | | 8 | | N/A |

| performance1-1 | 1 GB Performance | 1024 | 20 | 0 | | 1 | | N/A |

| performance1-2 | 2 GB Performance | 2048 | 40 | 20 | | 2 | | N/A |

| performance1-4 | 4 GB Performance | 4096 | 40 | 40 | | 4 | | N/A |

| performance1-8 | 8 GB Performance | 8192 | 40 | 80 | | 8 | | N/A |

| performance2-120 | 120 GB Performance | 122880 | 40 | 1200 | | 32 | | N/A |

| performance2-15 | 15 GB Performance | 15360 | 40 | 150 | | 4 | | N/A |

| performance2-30 | 30 GB Performance | 30720 | 40 | 300 | | 8 | | N/A |

| performance2-60 | 60 GB Performance | 61440 | 40 | 600 | | 16 | | N/A |

| performance2-90 | 90 GB Performance | 92160 | 40 | 900 | | 24 | | N/A |

+------------------+-------------------------+-----------+------+-----------+------+-------+-------------+-----------+
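
Once you have picked an imageRef and a flavorRef, the request body from Step 3 can be generated instead of pasted together by hand. A rough sketch, assuming the cloud-config lives in a local file and that the server name and IDs contain no characters that need JSON escaping; build_server_payload is a hypothetical helper name, and the field names are the ones used in the curl request in Step 3:

```shell
#!/bin/sh
# build_server_payload NAME IMAGE_REF FLAVOR_REF USER_DATA_FILE
# Print the JSON body for the server create request, with the
# contents of USER_DATA_FILE Base64-encoded into "user_data".
# (Hypothetical helper; does no JSON escaping of its arguments.)
build_server_payload() {
    user_data=$(base64 < "$4" | tr -d '\n')
    printf '{"server": {"name": "%s", "imageRef": "%s", "flavorRef": "%s", "config_drive": "true", "user_data": "%s"}}\n' \
        "$1" "$2" "$3" "$user_data"
}

# Example (assumes a file named cloud-config in the current directory):
# build_server_payload testing-cloud-init-api \
#     09de0a66-3156-48b4-90a5-1cf25a905207 general1-1 cloud-config
```

The output can then be passed to curl with -d in place of the long inline string.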